
Matlab - Working with Videos

Aresh T. Saharkhiz - Matlab Video Analysis

Friday, June 12, 2020

Thursday, June 23, 2011

The Euclidean Distance/Vector Angle Edge Detector (DRAFT)

This edge detector uses color information to detect edges. Where most edge detectors use only intensity, this one also considers hue and saturation.


Algorithm for Euclidean Distance/Vector Angle Edge Detector:

- For each pixel in the image, take the 3×3 window of pixels around it.

- Calculate the saturation-based combination of the Euclidean distance and Vector Angle between the center pixel and each pixel on the ring of the 3×3 window.

- Assign the largest value obtained to the center pixel.

- Apply a threshold to the image to eliminate false edges
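The code in this draft only computes the Euclidean distance term. As a rough illustration of the second step, here is a minimal sketch of how a vector angle term and a saturation-based weight could be combined for one pair of pixels. The sigmoid weighting, the `slope` and `offset` values, and all function names are assumptions made for this sketch and are not taken from the post.

```matlab
% Vector angle term: sine of the angle between two RGB vectors.
% eps guards against division by zero for black pixels (assumption of this sketch).
vec_angle = @(v1, v2) sqrt(max(0, 1 - (dot(v1, v2) / (norm(v1) * norm(v2) + eps))^2));

% Euclidean distance, scaled to [0, 1] by the largest possible RGB distance.
euc_dist = @(v1, v2) norm(v1 - v2) / (sqrt(3) * 255);

% HSV-style saturation of one RGB triple (0 for grays, 1 for pure colors).
saturation = @(v) (max(v) - min(v)) / (max(v) + eps);

% Hypothetical sigmoid weight: close to 1 when a pixel is well saturated.
slope = 10; offset = 0.2;   % illustrative values only
alpha = @(s) 1 ./ (1 + exp(-slope * (s - offset)));
rho = @(v1, v2) sqrt(alpha(saturation(v1)) * alpha(saturation(v2)));

% Saturation-based combination: vector angle dominates for saturated pairs,
% Euclidean distance dominates when either pixel is close to gray.
combined = @(v1, v2) rho(v1, v2) * vec_angle(v1, v2) + (1 - rho(v1, v2)) * euc_dist(v1, v2);

red = [255 0 0]; dark_red = [128 0 0]; gray = [128 128 128];
d_same_hue = combined(red, dark_red);   % same hue, different intensity
d_red_gray = combined(red, gray);       % saturated color against gray
```

With these illustrative constants, two pixels of the same hue but different brightness score much lower than a red pixel next to a gray one, which is the behavior a hue-aware detector is after.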

We use a vector gradient edge detector with a 3×3 mask. First find E_vg, the Euclidean distance operator:

    % Split the RGB image I1 into double-precision channels
    R = double(I1(:,:,1));
    G = double(I1(:,:,2));
    B = double(I1(:,:,3));

    I_size = size(I1(:,:,1));
    E_vg = zeros(I_size(1), I_size(2));

    % For every interior pixel, keep the largest RGB distance between
    % the center pixel and any pixel in its 3x3 window
    for y = 2:(I_size(1)-1)
        for x = 2:(I_size(2)-1)
            for i = -1:1
                for j = -1:1
                    ED = sqrt((R(y+i,x+j)-R(y,x))^2 + (G(y+i,x+j)-G(y,x))^2 + (B(y+i,x+j)-B(y,x))^2);
                    if ED > E_vg(y,x)
                        E_vg(y,x) = ED;
                    end
                end
            end
        end
    end

Run the resulting E_vg through a threshold:

    ED_thresh = 45.0; % change for testing, up to 255
    ED_edges = zeros(I_size(1), I_size(2));

    % Mark every pixel whose distance exceeds the threshold as an edge
    for y = 1:I_size(1)
        for x = 1:I_size(2)
            if E_vg(y,x) > ED_thresh
                ED_edges(y,x) = 255;
            end
        end
    end
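A quick way to sanity-check the Euclidean distance step is to run it on a tiny synthetic image where the edge location is known. The sketch below does that with array slicing instead of per-pixel loops; the 6×6 red/blue test image is made up for illustration, and the threshold of 45 is the value used in the post.

```matlab
% Hypothetical 6x6 test image: left half pure red, right half pure blue.
I1 = zeros(6, 6, 3, 'uint8');
I1(:, 1:3, 1) = 255;
I1(:, 4:6, 3) = 255;

I = double(I1);
[h, w, ~] = size(I);
E_vg = zeros(h, w);

% Interior pixels (the border is skipped, as in the loop version).
c = I(2:h-1, 2:w-1, :);
for i = -1:1
    for j = -1:1
        % Neighbors shifted by (i, j), compared against the center pixels.
        n = I((2:h-1)+i, (2:w-1)+j, :);
        ED = sqrt(sum((n - c).^2, 3));
        E_vg(2:h-1, 2:w-1) = max(E_vg(2:h-1, 2:w-1), ED);
    end
end

% Threshold: 255 where the distance exceeds 45, 0 elsewhere.
ED_edges = 255 * (E_vg > 45.0);
```

Only the two columns on either side of the red/blue boundary should be marked, and the one-pixel border stays at zero because the 3×3 window does not fit there.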

Wednesday, December 22, 2010

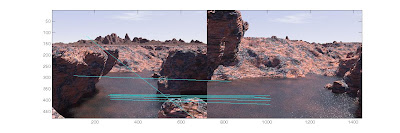

Region Of Interest using Optical Flow

Shaking VS Stable

Tracking using Lucas Kanade Optical Flow

The ROI (region of interest) is selected manually by the user.

GroundTruth Tracking

ntxy Ground truth

I used the following code to create a manual ground-truth track using mouse clicks:

    % Ground truth tracking
    picnum = 60;        % number of frames
    numObjects = 3;     % track 3 objects only
    fig = figure;
    points = cell(numObjects, picnum);

    for obj = 1:numObjects
        for tframe = 1:picnum
            filename1 = sprintf('./video/%d.pgm', tframe);
            % Show the frame first, so each click lands on the frame it belongs to
            figure(fig);
            cla;
            imshow(filename1); hold on;
            title('Click the top left corner of an object to track...');
            [x1, y1, button] = ginput(1);
            points{obj,tframe} = [x1 y1];
            if button ~= 2
                plot(x1, y1, 'rx');
            % The else/break below is commented out to avoid unwanted
            % empty points in the cell
            % else
            %     break;
            end
        end
    end
    hold off

    % Draw each object's track on the first frame, one color per object
    imshow(sprintf('./video/%d.pgm', 1)); hold on;
    colors = {'y', 'r', 'b'};
    for obj = 1:numObjects
        xy = cell2mat(points(obj,:)');   % picnum-by-2 matrix of [x y]
        plot(xy(:,1), xy(:,2), colors{obj}, 'LineWidth', 2);
    end
    hold off
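The clicked points can also be flattened into a table for later comparison against a tracker. The sketch below assumes that "ntxy" means one row per click with the columns object number n, frame number t, x and y; that reading is a guess, and the sample clicks are made up.

```matlab
% Hypothetical clicks: 2 objects tracked over 2 frames, same layout as `points`.
points = {[10 20], [11 21]; [30 40], [31 42]};

[numObjects, numFrames] = size(points);
ntxy = zeros(numObjects * numFrames, 4);

row = 1;
for n = 1:numObjects
    for t = 1:numFrames
        % One row per click: object index, frame index, x, y.
        ntxy(row, :) = [n, t, points{n, t}];
        row = row + 1;
    end
end
```

A flat numeric matrix like this is easy to save with `save` or `dlmwrite` and to compare frame by frame with tracker output.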

Labels: ground truth, groundtruth table, tracking

Wednesday, November 3, 2010

Face Detection trials

Detecting the head: while SIFT shows its robustness to scale and rotation, it still has some trouble with illumination.

Clearer YouTube videos

http://www.youtube.com/watch?v=mgr96oeXqH0

http://www.youtube.com/watch?v=lapux3e91_Y

http://www.youtube.com/watch?v=bMBNLPcLOVk

Matlab AVI video use

MATLAB needs a raw (uncompressed) video file to import and work with. However, most cameras and recording devices compress their output to reduce file size.


My trouble was an AVI file encoded with a different codec. When I used AVIREAD in MATLAB, it gave an error saying that it could not find the codec or that the frames were not known.

One option that is free:

Use FFmpeg. I am downloading the executable for Windows from this URL

FFmpeg is a command-line tool, so to convert a file to raw video for use in MATLAB, run this command:

    c:/> ffmpeg -i foo.avi -vcodec rawvideo bar.avi

foo.avi is your compressed video file.

bar.avi is the new raw video file that you will use in MATLAB.
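The conversion can also be started from inside MATLAB. This is a minimal sketch that assumes `ffmpeg` is on the system path and uses the file names from the post. It reads the result with `VideoReader` rather than AVIREAD; whether `VideoReader` and `readFrame` are available depends on your MATLAB version, so treat that part as an assumption.

```matlab
inFile  = 'foo.avi';   % compressed source video (name taken from the post)
outFile = 'bar.avi';   % raw output video (name taken from the post)

% The ffmpeg command from the post, built as a string; the quotes allow
% paths that contain spaces.
cmd = sprintf('ffmpeg -i "%s" -vcodec rawvideo "%s"', inFile, outFile);

% Only run the conversion when the input file actually exists.
if exist(inFile, 'file') == 2
    status = system(cmd);   % 0 means ffmpeg reported success
    if status == 0
        v = VideoReader(outFile);    % assumption: VideoReader is available
        firstFrame = readFrame(v);   % first frame as an image array
    end
end
```

Raw video files get large quickly, so it may be worth converting only the clip you need.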

Labels: avi matlab, ffmpeg, matlab compression, matlab ffmpeg, raw video, raw video matlab

Wednesday, October 27, 2010

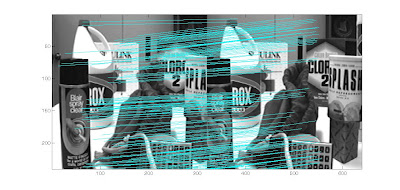

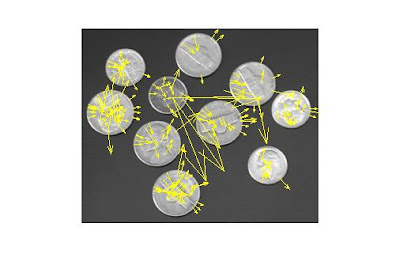

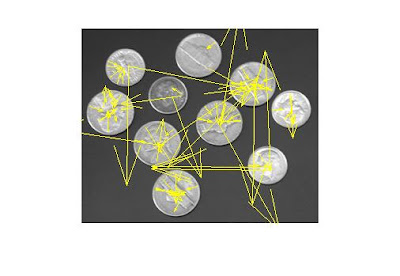

SIFT keypoint matching Trials of my Implementation

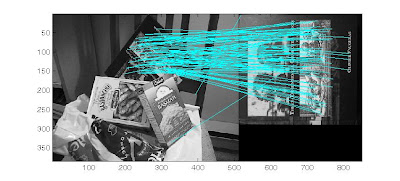

for my second experiment i tried translation invariance, 2 images taken from different angles. i got 243 matches

for my second experiment i tried translation invariance, 2 images taken from different angles. i got 243 matches In my next experiment i tried a complete affine transformation of a graffiti wall, i got 22 matches but not so accurate. this shows the keypoints have a problem in its rotation and scaling invariants. my magnitude and teta maybe the cause of this.

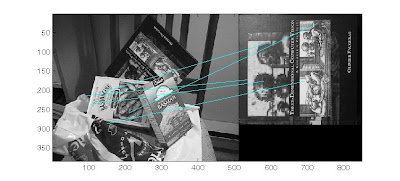

In my next experiment i tried a complete affine transformation of a graffiti wall, i got 22 matches but not so accurate. this shows the keypoints have a problem in its rotation and scaling invariants. my magnitude and teta maybe the cause of this. This is a matching from David Lowes algorithm showing a clear affine invariance.

This is a matching from David Lowes algorithm showing a clear affine invariance.

My implementation gives a very bad matching. A lot more work to do.

And more bad results: if the orientation of a keypoint is wrong, the matching also suffers, so I tried different arctangent functions in MATLAB:

atan(dy/dx);
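One thing worth checking here is the difference between `atan` and `atan2`. `atan(dy/dx)` only returns angles between -pi/2 and pi/2, so two gradients pointing in opposite directions get the same orientation, while `atan2(dy, dx)` keeps the quadrant. A small sketch with a made-up gradient (the values of `dx` and `dy` are illustrative only):

```matlab
dx = -1; dy = -1;                 % hypothetical gradient pointing down-left

theta_atan  = atan(dy / dx);      % quadrant is lost: same result as dx = 1, dy = 1
theta_atan2 = atan2(dy, dx);      % quadrant is kept

magnitude = sqrt(dx^2 + dy^2);    % gradient magnitude

% Orientation mapped to [0, 360) degrees, as needed for an orientation histogram.
theta_deg = mod(theta_atan2 * 180 / pi, 360);
```

Here `theta_atan` comes out as pi/4 (45 degrees) while `theta_deg` is 225 degrees, so a descriptor built on `atan` would treat this gradient as if it pointed the opposite way.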

Both images show clearly that rotation invariance is not achieved; this is why the matching gives a lot of false negative results.

Furthermore, the amount of blur is also very important.

Images and details are from (based on my understanding): Lowe, David G. "Distinctive Image Features from Scale-Invariant Keypoints". International Journal of Computer Vision, 60, 2 (2004)
