$$\rho = x\cos\theta + y\sin\theta$$

Here rho is the distance from the origin to the line, measured along a vector perpendicular to the line, and theta is the angle between the x-axis and that vector.
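As a quick numerical check of the formula, the snippet below takes several points on the line x + y = 10 and evaluates rho at theta = 45 degrees. These points are a hypothetical example, not from the original post. The normal of this line makes a 45-degree angle with the x-axis, so every point should give the same rho, 10/sqrt(2), up to rounding. This is why collinear points all vote for a single (rho, theta) cell. The snippet uses only base MATLAB (cosd, sind).

```matlab
% Hypothetical points on the line x + y = 10, chosen only for illustration.
theta = 45;                 % angle of the line's normal, in degrees
x = [0 2 5 10];
y = 10 - x;

% Normal-form distance for each point at this theta.
rho = x*cosd(theta) + y*sind(theta);
disp(rho)                   % all entries equal 10/sqrt(2) up to rounding
```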

The hough function generates a parameter-space (accumulator) matrix whose rows and columns correspond to these rho and theta values, respectively. Each entry counts how many edge pixels lie on the line with that (rho, theta). The houghpeaks function finds peaks in this space, which correspond to candidate lines in the input image.
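To make the voting idea concrete, here is a minimal sketch of how such an accumulator could be filled using only base MATLAB. It rests on its own assumptions: 1-degree theta bins from -90 to 89, 1-pixel rho bins, and zero-based coordinates with the origin at the top-left pixel. It is not the code inside hough, whose binning and conventions may differ. The synthetic image and variable names are hypothetical.

```matlab
% Illustrative accumulator (not hough's implementation); base MATLAB only.
BW = false(50, 50);
BW(sub2ind(size(BW), 10:40, 10:40)) = true;     % synthetic diagonal line y = x

thetaDeg = -90:89;                               % assumed 1-degree theta bins
[yy, xx] = find(BW);                             % row index = y, column index = x
xx = xx - 1; yy = yy - 1;                        % assumed zero-based coords, origin top-left
D = ceil(hypot(size(BW,1)-1, size(BW,2)-1));     % largest possible |rho|
rhoVals = -D:D;                                  % assumed 1-pixel rho bins
H = zeros(numel(rhoVals), numel(thetaDeg));

for k = 1:numel(xx)
    % Each edge pixel casts one vote per theta bin, at the rho it implies.
    r = round(xx(k)*cosd(thetaDeg) + yy(k)*sind(thetaDeg));
    idx = sub2ind(size(H), r + D + 1, 1:numel(thetaDeg));
    H(idx) = H(idx) + 1;
end

% The strongest cell is the best-supported line.
[votes, peak] = max(H(:));
[ri, ti] = ind2sub(size(H), peak);
fprintf('peak: %d votes at rho = %d, theta = %d deg\n', votes, rhoVals(ri), thetaDeg(ti));
```

For this diagonal, all 31 pixels land in the same cell, so the peak sits at rho = 0, theta = -45 degrees. houghpeaks generalizes this single maximum to several peaks. It applies a threshold and suppresses a neighborhood around each peak it picks.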

The houghlines function finds the endpoints of the line segments that correspond to peaks in the Hough transform. It also merges segments separated by small gaps (the 'FillGap' option) and discards segments shorter than 'MinLength'.
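The gap-filling behavior can be pictured in one dimension. The sketch below is a simplification. It assumes the edge pixels belonging to one Hough peak have already been projected to positions along that line; the pos values are hypothetical. It merges runs whose spacing is at most fillGap, then drops segments shorter than minLength. This loosely mirrors the FillGap and MinLength options passed to houghlines in the code, but it is not houghlines' actual implementation.

```matlab
% Simplified 1-D gap filling along one line (illustrative, not houghlines' code).
pos = [0 1 2 3 4 8 9 10 11 12 13 14 30 31 32];  % hypothetical projected positions
fillGap = 5;      % merge runs whose spacing is at most this many pixels
minLength = 7;    % discard merged segments shorter than this

pos = sort(pos);
breaks = find(diff(pos) > fillGap);         % last index of each run before a big gap
startIdx = [1, breaks + 1];
endIdx = [breaks, numel(pos)];
segments = [pos(startIdx).', pos(endIdx).'];   % one [start end] row per segment

% Keep only segments that are long enough.
lengths = segments(:,2) - segments(:,1);
segments = segments(lengths >= minLength, :);
disp(segments)
```

With these values, the spacing of 4 between positions 4 and 8 is bridged, giving one segment from 0 to 14. The short run from 30 to 32 is discarded.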

The code:

clear all

close all

clc;

disp('Testing Purposes Only....');

%fin = 'sampleVideo.avi';

fin = 'smallVersion.avi';

fout = 'test2.avi';

avi = aviread(fin);

% Convert to RGB to GRAY SCALE image.

avi = aviread(fin);

pixels = double(cat(4,avi(1:2:end).cdata))/255; %get all pixels (normalize)

nFrames = size(pixels,4); %get number of frames

for f = 1:nFrames

pixel(:,:,f) = (rgb2gray(pixels(:,:,:,f))); %convert images to gray scale

end

rows=128;

cols=160;

nrames=f;

for l = 2:nrames

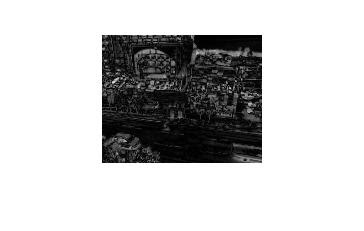

%edge detection

edgeD(:,:,l) = edge(pixel(:,:,l),'canny');

g(:,:,l) = double(edgeD(:,:,l));

%subtract background

d(:,:,l)=(abs(pixel(:,:,l)-pixel(:,:,l-1))); %subtract current pixel from previous

%convert to binary image

k=d(:,:,l);

bw(:,:,l) = im2bw(k, .2);

bw1=bwlabel(bw(:,:,l));

[H,theta,rho] = hough(edgeD(:,:,l));

P = houghpeaks(H,5,'threshold',ceil(0.1*max(H(:))));

x = theta(P(:,2));

y = rho(P(:,1));

lines = houghlines(edgeD(:,:,l),theta,rho,P,'FillGap',5,'MinLength',7);

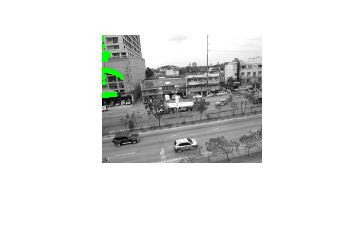

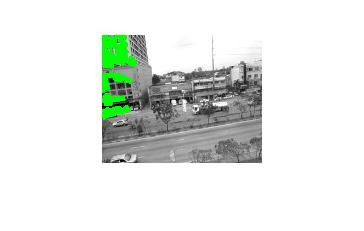

imshow(pixel(:,:,l)); hold on;

max_len = 0;

for k = 1:length(lines)

xy = [lines(k).point1; lines(k).point2];

plot(xy(:,1),xy(:,2),'LineWidth',2,'Color','green');

% Plot beginnings and ends of lines

plot(xy(1,1),xy(1,2),'x','LineWidth',2,'Color','yellow');

plot(xy(2,1),xy(2,2),'x','LineWidth',2,'Color','red');

len = norm(lines(k).point1 - lines(k).point2);

if ( len > max_len)

max_len = len;

xy_long = xy;

end

end

% highlight the longest line

plot(xy_long(:,1),xy_long(:,2),'LineWidth',2,'Color','cyan');

plot(xy_long(:,1),xy_long(:,2),'x','LineWidth',2,'Color','yellow');

plot(xy_long(:,1),xy_long(:,2),'x','LineWidth',2,'Color','red');

drawnow;

hold off

end

Results:

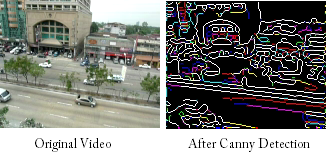

The same process applied to a single image:

```matlab
% Load image, smooth it, find edges
I = rgb2gray(imread('pillsetc.png'));
I = imcrop(I, [30 30 450 350]);
J = imfilter(I, fspecial('gaussian', [17 17], 5), 'symmetric');
BW = edge(J, 'canny');

% Hough transform and show the accumulator matrix
[H, T, R] = hough(BW);
imshow(imadjust(mat2gray(H)), [], 'XData',T, 'YData',R, 'InitialMagnification','fit')
xlabel('\theta (degrees)'), ylabel('\rho')
axis on, axis normal, hold on
colormap(hot), colorbar

% Detect peaks
P = houghpeaks(H, 4);
plot(T(P(:,2)), R(P(:,1)), 'gs', 'LineWidth',2);

% Detect lines and overlay them on top of the image
lines = houghlines(BW, T, R, P);
figure, imshow(I), hold on
for k = 1:length(lines)
    xy = [lines(k).point1; lines(k).point2];
    plot(xy(:,1), xy(:,2), 'g.-', 'LineWidth',2);
end
hold off
```